This article was first published on the WeChat public account Xinzhiyuan (New Wisdom). Its content represents the author's personal opinion and does not represent the position of Hexun.com. Investors act on it at their own risk.

Compiled by Xinzhiyuan: Zhang Yishu Chang

[Xinzhiyuan Guide] What technologies are related to smart terminals? What role does the new generation of human-computer interaction technology play in terminal intelligence? What are the challenges of voice interaction technology and smart speaker technology? What is the development trend of the next stage of artificial intelligence? What value should smart home control provide to users? Can you make an ideal smart speaker simply by stringing together speech recognition and microphone array technologies? Can China launch a speaker that dominates the market, or is at least widely accepted by consumers? At the closed-door June 100-member forum held by Xinzhiyuan together with the Android Green Alliance and the Institute of Automation of the Chinese Academy of Sciences, the in-depth explanations and lively exchanges of many academic and industry experts may offer you some inspiration.

To try to answer these questions, Xinzhiyuan invited a number of academic and industry experts to its June 100-member closed-door forum, jointly organized with the Android Green Alliance and the Institute of Automation of the Chinese Academy of Sciences. The experts explored human-computer interaction and terminal intelligence from the perspectives of technology, applications, difficulties, value, business models and prospects, aiming to give participants a comprehensive understanding of the development and trends of the new generation of human-computer interaction, along with some inspiration.

Experts taking part in the discussion included the following (listed in speaking order, as below):

Zhang Baofeng, Huawei CBG Software Engineering Department VP, Terminal Smart Engineering Department

Tao Jianhua, Deputy Director, State Key Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences

Zhao Feng, Vice President and CTO of Haier Home Appliances Industry Group

Sun Fuchun, Deputy Director, State Key Laboratory of Intelligent Technology and Systems, Tsinghua University

Huang Wei, co-founder and CEO of Yunzhisheng

Ding Yi, co-founder of the spirit

Cheng Wei, Director of Innovation Incubation, Microsoft Asia Pacific R&D Group

The 100-member forum was hosted by Ms. Yang Jing, founder of Xinzhiyuan.

Ms. Yang Jing was Deputy Director of Media Purchasing and Consulting at Zenith Media (2002-2010) and a business consultant for China Economic Net (2010-2014). In 2014, she planned and hosted a series of seminars on artificial intelligence and big data, including "Singularity Approaching", "Algorithm Empire" and "Social People and Robots in the Age of Big Data". In March 2015, she co-hosted the "New Smart Times Forum" with the Machinery Industry Press, and was invited to host sub-forums including the Intelligent Social Technology Expert Forum of the 2015 China Association for Science and Technology, the 2015 Robot World Cup Industry Summit, and the "New Era of Robots" sub-forum of the World Robot Conference. In September 2015, Xinzhiyuan was founded. In March 2016, the book "Xinzhiyuan: Machine + Human = Super-Intelligent Age" was published. In October 2016, she co-hosted the World Artificial Intelligence Conference and published the "China Artificial Intelligence Industry Development Report".

After Ms. Yang Jing's welcome speech, Zhao Hong, director of the third-party testing department of the Huawei CBG Software Engineering Department and representative of the Android Green Alliance, also gave a warm welcome speech.

Zhang Baofeng: The AI scare index and three pain points for the future of terminal intelligence

Zhang Baofeng is VP of the Huawei CBG Software Engineering Department and of the Terminal Intelligence Engineering Department, responsible for the development and delivery of terminal AI software. He was previously deputy director of Huawei Noah's Ark Lab, responsible for medium- and long-term technical research in data science. His research interests include data mining, machine learning and artificial intelligence. He is a member of the expert group of China's national "Core Electronic Devices, High-End Generic Chips and Basic Software" major project and a member of the CCF Big Data Expert Committee.

Zhang Baofeng joined Huawei in 1998 and has more than 18 years of experience in information technology. He has extensive experience in international and national standards organizations. He served as a rapporteur for fixed telecommunication networks in Study Group 13 of the International Telecommunication Union, and as deputy head of the Working Committee on Switching Technology.

At the June 100-member forum, Zhang Baofeng explained the three needs of future terminal intelligence (understanding users, proactive services and lifelong learning) and its three major pain points (on-device intelligence, product-line measurement and deep learning). He said: "Let me share my understanding of the intelligent future of mobile terminals. You can judge what is right and what is wrong." His understanding may well be a key to the direction of the terminal intelligence industry. His full speech and slides are shared in the article "AI scare index and the three major pain points of the future of terminal intelligence".

Tao Jianhua: Voice interaction technology will be one of the most important entry points for mobile terminals

Tao Jianhua, Ph.D., is a researcher, doctoral supervisor and winner of the National Science Fund for Distinguished Young Scholars. He is currently deputy director of the State Key Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences. He received his bachelor's and master's degrees in electronics from Nanjing University in 1993 and 1996, and his Ph.D. in computer science from Tsinghua University in 2001. He currently serves as a Steering Committee member of IEEE Trans. on Affective Computing, Vice President of ISCA SIG-CSLP, Executive Director of the HUMAINE Association, Executive Director of the China Computer Federation, Director of the Chinese Association for Artificial Intelligence, Director of the Chinese Information Processing Society of China, Director of the Acoustical Society of China, and Secretary General of the Language Resources Construction and Management Committee of the Chinese Information Processing Society. He has led or participated in more than 20 national-level projects (key 863 Program projects, the National Natural Science Foundation, the National Development and Reform Commission and the Ministry of Science and Technology), and has served as a project evaluation expert for the National Natural Science Foundation and the 863 Program. He has published more than 150 papers in SCI- or EI-indexed journals and conferences, applied for 15 Chinese invention patents and 1 international patent, and edited 2 academic books. His research results have won many awards at important academic conferences in China and abroad, and have twice won the second prize of the Beijing Science and Technology Progress Award. He has served as a program committee member or chair at prestigious academic conferences including ICPR, ACII, ICMI, IUS, ISCSLP and NCMMSC, and is currently on the editorial boards of the Journal on Multimodal User Interfaces and the International Journal of Synthetic Emotions.

Five core technologies of Artificial Intelligence 2.0

Within the broad concept of artificial intelligence, there are still many directions to explore.

A brief review of its history shows that artificial intelligence technology has gone through several booms and several lows. After 2010, the combination of artificial intelligence with deep neural networks has truly brought us great opportunities. What does the "Artificial Intelligence 2.0" discussed in the industry in recent years actually contain? Artificial Intelligence 2.0 is a new generation of artificial intelligence driven by major changes in the information environment and by new development goals: there are new environments, new targets and scalable new technologies, and the objects of research have changed in many ways. Most importantly, big data intelligence, cross-media intelligence, autonomous intelligence, human-machine hybrid augmented intelligence and group intelligence are the key tasks for future development. Together these constitute the five core technologies of Artificial Intelligence 2.0.

Attention mechanisms, memory, transfer learning, reinforcement learning, and semi-supervised and unsupervised learning are the main focuses of future AI development. Today we mainly see deep neural network methods, but we believe that many new learning methods will receive a lot of attention as AI develops, general artificial intelligence among them. In the past, general AI was unthinkable; now some preliminary exploration is possible. It will be difficult to solve this problem within a short, limited time, but preliminary exploration is feasible.

Big data intelligence is of general concern to everyone. In the national strategic layout in particular, cloud computing and big data have each been set up as independent directions. This work is easy to understand, especially as it supports applications such as smart transportation and smart cities.

Cross-media intelligence is a new research topic in artificial intelligence. There is more and more multimedia data on the Internet, and the terminal and the cloud are integrating ever more deeply, so that it is hard to say where the boundary between them lies. Text, images, voice and video will become closely intertwined, forming cross-media features. How to use semantic associations to integrate different kinds of cross-media information more closely is the cross-media intelligence problem that future AI needs to solve. It has many uses in Internet applications and in many security fields.

There is also human-machine hybrid augmented intelligence. In the future, the boundary between human and machine will begin to blur. Human-machine hybrid augmented intelligence can, on the one hand, enhance people's own abilities and, on the other, create more advanced agents through close cooperation between human and machine.

As for group intelligence, mixing many different agents to build a higher level of collective intelligence will become a new focus.

Autonomous intelligent systems also involve intelligent technologies, and a lot of work remains to be done there.

Three levels of the overall development of Artificial Intelligence 2.0

We divide the overall development of Artificial Intelligence 2.0 technology into three major levels: the basic support level, the key technology level and the application scenario level.

The basic support level includes all the smart sensors and chips related to artificial intelligence, whether accelerator chips for deep learning or sensor chips, which solidify common perception algorithms into hardware. Together with data resources and platform software, these form the basic support system.

Key technologies include machine learning, which in turn includes deep learning; we now regard deep learning as already a traditional approach. Machine learning also includes reinforcement learning, adversarial learning and so on. Other key technologies include vision, speech, image processing, human-computer interaction, big data and cloud computing.

At the application level, artificial intelligence keeps penetrating different fields. Its applications include robots, smart driving, drones and a range of smart terminals such as wearable devices. Smart healthcare has recently attracted broad attention, and in smart security, smart finance, smart industry and similar areas, artificial intelligence may produce some large or breakthrough applications.

Technologies related to smart terminals: augmented reality, 3D sound field, and voice interaction

Smart terminals come in very diverse forms. In the past few years, helmets and smart glasses have appeared alongside the usual mobile phones and tablets (PADs). Smart terminals have shipped in very large quantities at home and abroad, and the market is very large. Across the whole smart terminal field, the development of intelligent technology in recent years has driven explosive growth, and new wearable smart terminals are evolving rapidly and changing people's lives.

Augmented reality

Smart terminals have some very interesting applications, such as augmented reality, which we believe may become one of their important applications. What does it do? A wearable smart terminal or a mobile phone captures the surrounding scene through its camera or by voice and superimposes the corresponding information on it. This information offers different interpretations of the surrounding scene, and scene images can even be used for positioning. You might ask whether positioning needs image information at all, since GPS can do it. In fact, image-based positioning works indoors and in other places GPS cannot cover. Augmented reality has a lot of room for development.

Three-dimensional sound field generation technology

Another interesting line of work for mobile terminals is 3D sound field generation. Many people ride bicycles or walk around with headphones on. The music they listen to is stereo, but that stereo is not truly three-dimensional: it only coordinates the volume in the left and right ears to express sound effects. We call it stereo, but in fact it only produces a flat sound field. Is it possible to produce a truly three-dimensional sound field when listening to music or watching a movie, using a pair of headphones instead of a surround sound system? A surround sound system places many speakers around a room to produce this effect; can the same effect be achieved with one pair of headphones? This is a very interesting problem, and we have built quite a good demo. We can position music and voices anywhere within a full 360-degree range around the listener, including above, below, left and right, so that the listener perceives a sound as coming from in front or from behind. This is very different from ordinary stereo.
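To make the contrast with ordinary stereo concrete, here is a minimal sketch of the two simplest binaural cues: an interaural time difference (ITD) from the Woodworth spherical-head approximation, plus a crude broadband interaural level difference (ILD). This is an illustration under stated assumptions, not the speaker's method: real headphone 3D audio filters each source through head-related transfer functions (HRTFs), which also capture the auricle's filtering and are needed for front/back and elevation cues that ITD and ILD alone cannot provide. The head radius, speed of sound, 6 dB maximum ILD and whole-sample delay are assumed values chosen for simplicity.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C
MAX_ILD_DB = 6.0         # illustrative broadband level difference at 90 degrees


def woodworth_itd(azimuth_deg):
    """Interaural time difference in seconds (Woodworth spherical-head model).

    Azimuth 0 = straight ahead, positive = source to the listener's right.
    Intended for |azimuth| <= 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))


def spatialize(mono, sample_rate, azimuth_deg):
    """Return (left, right) channels with an ITD delay and ILD gain applied."""
    delay = round(abs(woodworth_itd(azimuth_deg)) * sample_rate)  # whole samples only
    ild_db = MAX_ILD_DB * math.sin(math.radians(azimuth_deg))
    near_gain = 10 ** (abs(ild_db) / 40)  # split the ILD evenly between the two ears
    far_gain = 1 / near_gain
    near = [near_gain * x for x in mono] + [0.0] * delay
    far = [0.0] * delay + [far_gain * x for x in mono]
    # The ear facing the source hears it earlier and louder.
    return (far, near) if azimuth_deg >= 0 else (near, far)
```

For a source at 90 degrees the model gives an ITD of roughly 0.66 ms, about 29 samples at 44.1 kHz. Because a source at 30 degrees in front and one at 150 degrees behind produce the same ITD under this model, the sketch also shows why modeling the auricle, and personalizing that model, matters.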

Voice interaction technology

We have long said that voice interaction technology will be one of the most important entry points for mobile terminals. The mainstream interaction methods are essentially touch, keyboard input, handwriting and voice. Voice interaction has seen many technological changes in recent years, and voice technology now performs very well both in recognition rate and in noise reduction in the surrounding sound field. Voice access is becoming more and more market-ready. In the past, speech noise reduction in mobile phones worked best with a multi-microphone system, which achieves more effective hardware-based noise reduction.
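Multi-microphone noise reduction of this kind is commonly built on beamforming. As a hedged illustration, not a description of any phone's actual pipeline, a delay-and-sum beamformer for a uniform linear array aligns each microphone's signal by the extra travel time from a chosen direction and averages the channels, so sound from that direction adds coherently while diffuse noise partly cancels. The far-field model, integer-sample delays and the function names are simplifying assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s


def steering_delays(num_mics, spacing_m, angle_deg, sample_rate):
    """Integer-sample arrival delays for a uniform linear array (far-field model).

    angle_deg is measured from broadside (0 = directly in front of the array);
    mic m hears the wavefront m * spacing * sin(angle) / c seconds after mic 0.
    """
    tau = spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND
    delays = [round(m * tau * sample_rate) for m in range(num_mics)]
    base = min(delays)
    return [d - base for d in delays]  # shift so every delay is non-negative


def delay_and_sum(channels, delays):
    """Advance each channel by its arrival delay, then average the aligned samples."""
    length = min(len(ch) for ch in channels) - max(delays)
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
        for n in range(length)
    ]
```

With 4 microphones spaced 5 cm apart at 16 kHz and a source 30 degrees off broadside, the delays come out as 0, 1, 2 and 3 samples; steering to the wrong angle leaves the copies misaligned, so the target is attenuated along with the noise.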

Now, with deep learning methods, even better noise reduction can be achieved with a single microphone. The development of artificial intelligence has solved many past problems, making voice interaction technology more and more robust.
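One widely used way deep learning achieves single-microphone noise reduction, offered here as an assumption rather than as the speaker's system, is time-frequency masking: a network is trained to predict, for every spectrogram bin, a gain between 0 and 1, and the noisy magnitude is multiplied by that gain. The sketch below computes the ideal ratio mask (IRM) often used as the training target and applies a mask to one noisy frame. The neural network that would estimate the mask from the noisy input alone, and the STFT that produces the magnitudes, are omitted.

```python
def ideal_ratio_mask(clean_mag, noise_mag, beta=0.5, eps=1e-12):
    """IRM per frequency bin: (S^2 / (S^2 + N^2)) ** beta.

    clean_mag and noise_mag are magnitude spectra of one frame. They are known
    at training time because noisy speech is synthesized as clean + noise.
    """
    return [
        ((s * s) / (s * s + n * n + eps)) ** beta
        for s, n in zip(clean_mag, noise_mag)
    ]


def apply_mask(noisy_mag, mask):
    """Enhanced magnitude = mask * noisy magnitude (the noisy phase is typically reused)."""
    return [m * y for m, y in zip(mask, noisy_mag)]
```

For a bin where speech has magnitude 3 and noise magnitude 4, the mask is 0.6; a bin containing only noise gets a mask of 0 and is suppressed. At inference time the network's predicted mask takes the place of the ideal one.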

Even so, a lot of work remains unfinished, and today I raise some of it for everyone to think about. The most typical is the three-dimensional sound field problem. A 3D sound field simulates the human ear, which has an auricle. The auricle is not mere decoration: it is precisely because of the auricle that we can tell whether a sound comes from the front or from behind. Generating a 3D sound field over headphones requires modeling the auricle, but auricles vary from person to person, and this personalization has not been well solved.

As for voice interaction, as just mentioned, speech recognition and synthesis technology has greatly improved interaction performance. Looking closely, though, much work remains. Speakers still cannot talk too freely, although this is somewhat better than in the past.

Although current speech recognition systems perform well, first, the speech they recognize cannot be too colloquial; second, personalization is still not strong enough. Mixed-language speech recognition is another major difficulty.

Mobile terminals and artificial intelligence can be combined in many more ways, and we have made some preliminary explorations. Our new work combines deep learning with large corpora to characterize or generate deeper parameter information during human-computer interaction. Much of this still needs further work.

For reasons of time, I will not go through them one by one. That concludes today's report.

In work on mobile terminals, augmented reality, personalized 3D sound fields, emotional voice interaction and precise 3D visual interaction are all very interesting application scenarios for the future development of mobile terminals. We cannot say that mobile terminals must have these technologies, but they are indeed very interesting scenarios, and they involve a lot of work, for example on data interfaces. With the voice and visual interaction just mentioned, mobile terminals can serve many purposes in smart home and mobile office scenarios.

Sun Fuchun: Is artificial intelligence the "third apple" that changes the world?

Sun Fuchun is a professor and doctoral supervisor in the Department of Computer Science and Technology at Tsinghua University, a member of the Academic Committee of Tsinghua University, director of the Academic Committee of the Department of Computer Science and Technology, and executive deputy director of the State Key Laboratory of Intelligent Technology and Systems. He is also a member of the National 863 Program Expert Group, a member of the National Natural Science Foundation's Major Research Program "Cognitive Computing of Audiovisual Information", director of the Cognitive Systems and Information Processing Committee of the Chinese Association for Artificial Intelligence, and director of the Systems and Professional Committee. He serves as associate editor or area editor of the international journals "IEEE Trans. on Fuzzy Systems", "IEEE Trans. on Systems, Man and Cybernetics: Systems", "Mechatronics" and "International Journal of Control, Automation, and Systems (IJCAS)", on the editorial boards of the international journals "Robotics and Autonomous Systems" and "International Journal of Computational Intelligence Systems", and on the editorial boards of the domestic journals "Science China: Information Sciences" and "Acta Automatica Sinica".

Is artificial intelligence the "third apple" that changes the world?

Distinguished guests, everyone! I am very grateful to Xinzhiyuan for this opportunity to share. Today's topic is artificial intelligence and robots in the cognitive era. 2015 was widely called the first year of robots; later, 2016 was seen as the first year of artificial intelligence, and IBM said that 2016 marked the beginning of the cognitive era.

What are the five technologies that will most significantly affect human society in the next five years? The answer given in 2016 was sight, touch, smell, taste and hearing. Tsinghua began working on visual and auditory processing six years ago. A few days ago, Huawei proposed the era of touch. Touch is very important, especially when robots manipulate objects.

When shopping online, product photos are always taken from the best angle; when the item arrives, you may find that its texture and other qualities are not so good, and this is where touch helps. For vision, what matters most is semantic understanding. As explained earlier, vision is the most important sense: the human brain is a visual brain. There are also hearing and taste. How does a mother understand what her child wants from the child's voice? Children under one year old cannot yet talk, so how does the mother understand what they mean? There is also smell, such as the ability to smell disease.

In the past, the relationship between people and household appliances, as with other goods, was one-way: whether something was easy to use, I had to find out by testing it myself. With intelligence added, appliances become intelligent machines that have cognitive ability and can interact with you. People understand machines, and machines must also understand people.

Yesterday, during an interview with Gong Ke at Tianjin TV Station, I commented that education in the cognitive era is two-way, whereas in the past it was one-way: the Ministry of Education drew up a syllabus, and students who failed the exams could not graduate. In the intelligent era, how is the syllabus determined? Big data analysis of hundreds of thousands or even millions of students can judge whether the syllabus is right.

Many things have become intelligent in the cognitive era and are now within reach. In the past, when you couldn't find a parking space at a restaurant, you had to wait in the car. Now you don't: you leave the car there, its electronic system automatically detects where there is a parking space, and it parks itself. Someone who sees another person's beautiful handbag can search online to find out where it is sold and what its quality is. Of particular importance, when I visited the Australian National University last year, they had performed the first artificial retina experiment on a blind person, who could see the dark outlines of objects through artificial retina technology.

There is also the security field. In Beijing, everyone traveling from home to work passes first- and second-class cameras and is recorded. Beijing has already achieved license plate recognition: wherever your car goes, the Skynet system can detect it. A few years ago, we undertook a multi-camera tracking project for a Japanese company. The system tracked the company's employees and even recorded their movement trajectories within the building over the year as an indicator of performance.

In warfare, what matters is that the platform has cognition. In the United States, for example, bee-swarm drone systems use very small drones, and operating small drones as a swarm requires strong communication and recognition technology.

The next generation of new-concept weapons being developed in the United States has very strong cognitive ability, and American artificial intelligence is mainly driven by big companies. In fact, the word for "intelligence" (zhineng) was first proposed in China. Xunzi said, "Ability that finds its match is called capability (neng)": cognitive ability is innate in human beings. He also said, "Knowing that finds its match is called wisdom (zhi)": through social practice, people generate wisdom, and innovation is also an innate human instinct. When the two come together, talent emerges in social practice and cognitive ability is used to transform society. This is intelligence.

The ideological basis of artificial intelligence is very important; I will not say more about how to judge whether a machine is intelligent. The second important thing is the material basis: computers on the one hand and networks on the other.

The recent launch of 5G has also laid a very important foundation for the next stage of AI development. Memory, especially experience-based cloud learning, is not feasible without networks, including high-speed communication technology. Measured by the computing power that a thousand dollars buys, computers will surpass humans in 2040. Measured by biological standards, that is, the floating-point computing power provided per memory unit, machines will surpass humans even sooner.

Is artificial intelligence the "third apple" that changes the world? Adam and Eve's apple was the first apple to change the world. The apple that fell on Newton's head was the second. The apple on Turing's table was the third apple to change society. The defining feature of the coming era is that people and machines coexist: machines have intelligence and cognitive ability and can interact with you. Only in this third-apple era does the relationship between people and machines become two-way; in the past it was one-way.

The next stage of artificial intelligence development is neural mechanism-driven brain cognition

The next stage of artificial intelligence development is brain cognition driven by neural mechanisms. The human brain is a visual brain: visual signals pass from the eye to area V1 and on up to area V4.

Today's deep learning networks are connected layer by layer, with no feedback connections between layers. In the brain, however, there are feedback connections between layers and connections within the same layer. Using this mechanism to modify deep learning networks can bring unexpected gains: related work in our lab achieved better results on four datasets.
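One published way to add within-layer recurrence to a feed-forward network is the recurrent convolutional layer, in which each unit's state is updated for a few steps from a fixed feed-forward input plus lateral input from its neighbours in the same layer. The sketch below is a hypothetical 1-D, pure-Python illustration of that idea only; it covers the within-layer connections, not feedback between layers, and the kernels, step count, ReLU and function names are assumptions rather than the lab's model.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def conv1d_same(x, kernel):
    """1-D cross-correlation with zero padding; output length equals input length."""
    half = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(x):
                acc += w * x[j]
        out.append(acc)
    return out


def recurrent_layer(u, ff_kernel, lateral_kernel, steps=3):
    """x(0) = relu(ff * u); x(t) = relu(ff * u + lateral * x(t-1)) for t = 1..steps."""
    ff = conv1d_same(u, ff_kernel)  # feed-forward drive, computed once
    x = relu(ff)
    for _ in range(steps):
        lat = conv1d_same(x, lateral_kernel)  # input from neighbours in the same layer
        x = relu([a + b for a, b in zip(ff, lat)])
    return x
```

With `steps=0` the layer reduces to an ordinary feed-forward layer; each extra step lets activity spread to neighbouring units, so an isolated response of 1 at the centre of `[0, 1, 0]` becomes `[0.5, 1.0, 0.5]` after one step with lateral kernel `[0.5, 0.0, 0.5]`.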

Reinforcement learning: Google acquired DeepMind, which later built AlphaGo. AlphaGo applies the principles of deep learning and reinforcement learning, combining a single evaluation with the value network's assessment into an overall score.
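If the "single evaluation" and the value network above refer, as seems likely, to how AlphaGo scored leaf positions during Monte Carlo tree search (as described in the 2016 AlphaGo paper in Nature), the combination is a simple weighted mix. Here $v_\theta(s_L)$ is the value network's estimate for leaf position $s_L$, $z_L$ is the outcome of one fast rollout played out from that leaf, and $\lambda$ is a mixing weight:

$$
V(s_L) = (1 - \lambda)\, v_\theta(s_L) + \lambda\, z_L
$$

With $\lambda = 0$ the search relies only on the value network and with $\lambda = 1$ only on rollouts; the paper reported that an even mix, $\lambda = 0.5$, played best.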

Brain science research is also inseparable from instruments. The electron microscope used at Harvard University can image slices 30 nanometers thick. While mice play games, scanning and slicing make it possible to see neurons firing and to identify their coding. Such instruments are important for the development of brain science and even of future artificial intelligence.

In recent years, the extreme learning machine (ELM) has become popular. When I was writing my doctoral thesis, it was generally believed that neural networks were multi-layered and that the hidden-layer parameters of neurons had to be learned. In 2013 and 2015, anatomical studies found that these hidden-layer parameters are innate in humans and animals and do not need to be learned. Professor Huang Guangbin and others set the hidden-layer parameters by random generation and proposed the extreme learning machine method. In the past two years, this work has been combined with multiple kernel learning and deep learning.
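The extreme learning machine described above can be sketched in a few lines: the hidden-layer weights and biases are drawn at random and never trained, and only the output weights are solved in closed form by least squares. A minimal NumPy sketch follows; the tanh activation, the hidden-layer size, the small ridge term and the toy sine-fitting task are illustrative assumptions, not details from the talk.

```python
import numpy as np


class ExtremeLearningMachine:
    """Single-hidden-layer ELM: random, fixed hidden layer; least-squares output layer."""

    def __init__(self, n_hidden=50, reg=1e-6, seed=0):
        self.n_hidden = n_hidden
        self.reg = reg  # small ridge term keeps the linear solve well conditioned
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Hidden activations H = tanh(X W + b)
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, y):
        n_features = X.shape[1]
        # Hidden-layer parameters are drawn at random once and never trained.
        self.W = self.rng.normal(size=(n_features, self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        # Only the output weights are learned, in closed form:
        # beta = (H^T H + reg * I)^(-1) H^T y
        A = H.T @ H + self.reg * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._hidden(X) @ self.beta


if __name__ == "__main__":
    # Toy task (illustrative): fit sin(x) on [-pi, pi].
    X = np.linspace(-np.pi, np.pi, 200).reshape(-1, 1)
    y = np.sin(X).ravel()
    model = ExtremeLearningMachine(n_hidden=50).fit(X, y)
    print("training MSE:", np.mean((model.predict(X) - y) ** 2))
```

The design choice that makes ELM fast is visible in `fit`: training is a single linear solve rather than iterative backpropagation, at the cost of needing more hidden units than a fully trained network of similar accuracy.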

As for the development of robots: in the past, robotics mainly studied the robot's skeleton. Today's robots need not only bones but also sensors, muscles and a brain. Such robots are called cognitive robots. We need to study not only their kinematics and dynamics, but also how sensory information is perceived, how multimodal information is represented and fused, and how muscle movements produce complex manipulation.

Human-machine integration and life-like robots are an important concept. At the cellular level, research on living materials may become the nemesis of cancer, and may one day conquer cancer inside blood vessels.

In April 2016, the launch of the robot companion caused a lot of problems.

Our research team is also working on brain-controlled robots, which can be moved remotely by brain signals.

This is the third-generation skin-like robot we have made. At this year's International Conference on Robotics and Automation in Singapore, we were invited to present it in a special session. Our idea of artificial skin is not a patch applied to an injury: it is like human skin, with an epidermis and a dermis. The electronic epidermis measures texture and slip, and the dermis measures normal pressure. We have also done a lot of work on visual and tactile coding, including their fusion.

The development of robots relies on the development of artificial intelligence, and artificial intelligence is inseparable from the development of life science and brain science. The three form a closed loop.

The robot is the natural carrier of artificial intelligence; the two are golden partners. The next generation of robots will embody the ability to think, and artificial intelligence is what drives this. In the past, there were the Three Laws of Robotics. Now that artificial intelligence has developed this far, some fear has arisen. Last year, more than 100 scientists in the United States discussed the future development of artificial intelligence, and one very important question was whether artificial intelligence will harm humanity in the future. Artificial intelligence must have an objective function that evolves along with the development of human society.

IBM has proposed three principles for artificial intelligence: first, trust, meaning a relationship of mutual trust between people and AI systems; second, transparency, meaning we understand what an AI system is made of and what parameters it learns; third, AI platforms working alongside people in industry. These will be very important in the future.

In the development of artificial intelligence, the most frightening prospect is robots becoming self-aware. Current views on consciousness vary, including theories based on memory, quantum entanglement and perception packages.

Artificial intelligence should be the soul of the robot. A robot is machine plus human, and what provides the "human" part? Artificial intelligence. As AI develops, machines keep evolving. People and robots are two systems: people are living systems and robots are artificial systems, and the two keep learning from each other as they develop, with artificial systems serving as an important experimental platform. As the two systems evolve and learn from each other, one day they will meet, and that meeting may come when machines become self-aware.

Weak artificial intelligence is based on big data and deep learning, typified by AlphaGo. Its drawbacks are high energy and resource consumption; it is highly specialized and has a single function. AlphaGo can only play Go, not chess. It is intelligence under specific conditions and is not scalable.

Strong artificial intelligence is general artificial intelligence with human-like thinking. When people lack complete information, they can still reason and judge. When Chairman Mao led the four crossings of the Chishui River, there were few observation or decision-making tools. Why was he so successful? First, he acted according to the characteristics of the opposing commander. Second, he used telegrams to obtain local information, and so successfully commanded the four crossings of the Chishui River.

Parrots, a deep learning framework developed in-house by SenseTime, has trained a 1,207-layer network on the ImageNet classification task using 26 GPUs. Do we next need 3,000 layers and 200 GPUs? Certainly not. Deep learning also makes mistakes, such as misidentifying a panda, whereas people do not have that problem.
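The misidentified panda alludes to adversarial examples, where a tiny, deliberately chosen perturbation flips a model's prediction. The sketch below applies the fast gradient sign method (FGSM) to a toy logistic-regression "panda detector" in plain Python; the model, the input, and `eps` are made-up illustrative values, not anything from the talk, and a real attack would use a deep network's gradients instead.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 ("panda") under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def fgsm(w, b, x, label, eps):
    """Fast gradient sign method: move every feature by eps in the direction
    that increases the cross-entropy loss for the true label."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical 100-feature model and input (think of the features as pixels).
n = 100
w = [0.05 if i % 2 == 0 else -0.05 for i in range(n)]
b = 0.0
x = [0.6 if i % 2 == 0 else 0.4 for i in range(n)]

x_adv = fgsm(w, b, x, label=1, eps=0.15)
print(f"clean: {predict(w, b, x):.3f}  adversarial: {predict(w, b, x_adv):.3f}")
```

Each feature moves by only 0.15, but all the small changes push the score the same way, so they add up across the 100 features and flip the decision from "panda" to "not panda".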

The key question in between is structural information: how do we extract it? People have this ability, and understanding it is a task for brain science.

For the AI industry, algorithms and chips will be very important in the future.

Thank you all!

Huang Wei: Microphone array from A, speech recognition from B, natural language understanding from C, and the final product is a joke

Dr. Huang Wei received his PhD from the University of Science and Technology of China and was a postdoctoral fellow at Shanghai Jiao Tong University. He then worked as a senior researcher at the Motorola China Research Center, where he developed the world's first voiceprint authentication system for mobile phones. He later became a core executive at the Shanda Innovations Institute, where he founded its speech division. At the end of 2013 he joined Unisound (Yunzhisheng), a leading Chinese AI company, as CEO, responsible for its development strategy and operational management. Since 1999 he has worked on research projects and delivered products in fields including medicine, management information systems, natural sciences, speech, and games. For example, from 2002 to 2004 he took part in the NIST Speaker Recognition Evaluation (NIST SRE); the project won first place in the SRE main task and that year's highest honor, the "Golden Star Award". He is also the only Chinese researcher to have been a keynote speaker at the NIST evaluation two years in a row. He was nominated for the MIT TR35 in 2007 and named one of Shanghai's top ten leading talents in science and technology in 2009.


Artificial intelligence this year is different from last year. Last year, both the media and the market cared more about PR: companies of every size kept announcing that they had come first in some benchmark. This year we hear few such stories; more attention goes to what user value and business value a company's technology can create. Today's AI also differs from the two previous waves, which were constrained by the conditions of their time and lacking in many respects. Today's AI has shown the ability to outperform people in both hearing and vision, including in medical and financial scenarios. Within three years, the global AI industry may reach US$100 billion, with China as the fastest-growing market.

What value should smart home control provide to users?

The smart home is a very important scenario for AI. It spans a wide range of areas, and real estate is one of them. From 2002 to 2016 there were only 16 real estate projects involving smart technology, yet last year alone nearly 60 projects did.

When we talk about smart homes, we can't ignore products like Echo. They are natural intelligent controllers and are very likely to become the entry point for user information in the home-appliance environment. People view this trend differently. One view is that the user habit doesn't exist: few families in China like to listen to music. Another is: why would I control home appliances through a speaker when I can use my phone? But think about it: when you get home, isn't it silly to open a mobile app just to switch on the light?

Today, sales of this product category are still small. My first job was at Motorola, where I spent six years and watched Motorola and Nokia fall from their positions as giants. I also watched Apple grow from a small company into the most valuable company in the world.

When Apple launched the first-generation iPhone in 2007, it sold more than 1 million units worldwide. Last year, Echo sold more than 5 million units.

How did Apple overturn Motorola and Nokia? A crucial factor was multi-touch, a completely different form of interaction that undermined them at the foundation.

Do I need to listen to music through a speaker? That is not the real point of the debate. Does that mean the speaker itself doesn't matter? All one can say is that Amazon probably had music resources at the time, so it chose the speaker form to carry its cloud services, such as Alexa. Apple and Google replaced URLs with apps; Alexa replaces apps with skills. I believe this is a trend, and within three years everyone here will see it take shape.
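The shift from apps to skills can be pictured as a registry that maps understood intents to handlers, so the assistant, rather than the user, decides which "app" to run. A minimal sketch; the decorator, intent names, and handlers are hypothetical and are not the Alexa Skills Kit API.

```python
from typing import Callable, Dict

# Hypothetical skill registry: intent name -> handler function.
Skill = Callable[[Dict[str, str]], str]
SKILLS: Dict[str, Skill] = {}

def skill(intent: str) -> Callable[[Skill], Skill]:
    """Decorator that registers a handler under an intent name."""
    def register(fn: Skill) -> Skill:
        SKILLS[intent] = fn
        return fn
    return register

@skill("PlayMusic")
def play_music(slots: Dict[str, str]) -> str:
    return f"Playing {slots.get('song', 'something you like')}"

@skill("GetWeather")
def get_weather(slots: Dict[str, str]) -> str:
    return f"Fetching the weather for {slots.get('city', 'your city')}"

def dispatch(intent: str, slots: Dict[str, str]) -> str:
    """Route an already-understood request to its skill, the way a phone
    launches an app or a browser opens a URL."""
    handler = SKILLS.get(intent)
    if handler is None:
        return "Sorry, I can't do that yet."
    return handler(slots)

print(dispatch("PlayMusic", {"song": "Jasmine Flower"}))  # Playing Jasmine Flower
print(dispatch("OrderPizza", {}))                         # Sorry, I can't do that yet.
```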


Google has Google Home, Apple released HomePod this year, and Chinese companies are following. The iPhone succeeded because its user experience surpassed earlier button phones, and apps succeeded because they surpassed earlier URLs. So what value should an intelligent home hub provide to users? The most basic capability is connecting to and controlling the smart devices in the home. If it cannot connect to and control them, all its other intelligence is a castle in the air.


能够æˆä¸ºå®¶é‡Œæ£å¸¸ç”Ÿæ´»çš„助ç†ï¼Œæ供必è¦çš„基本的æœåŠ¡ï¼Œæœ‰ä¸€äº›å¨±ä¹é™ªä¼´çš„功能,ä¸å…‰æ˜¯å·¥å…·åŒ–,还能拟人化,这是智能家居ä¸æŽ§éœ€è¦å…·å¤‡çš„å‡ ä¸ªè¦ç‚¹ã€‚


智能音箱的技术挑战

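What interaction capabilities can deliver this value? How can users experience it in a way that goes beyond what they have had before? We are not saying we should abandon phones or apps; rather, other interaction capabilities should supplement mobile apps.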

Such a device should not be limited by space. Even two, three, four, five meters away or farther, it should be able, like a person, to stay on standby and wake up at any time, recognize far-field speech, and interact using the everyday language people use with each other. It should not only hear but understand, and not only understand but also give you what you want. This is the key to delivering value to customers.

The ideal is rosy, but reality is bleak: the technologies are hard to implement, and products that truly satisfy users are rare. When we got our hands on an Echo, we found it rather rigid; reality still fell well short of what we had imagined. Some time ago an article circulated online titled "Smart Speakers: From Getting Started to Giving Up".

当我们æ„识到我们愿æ„å°è¯•Alexaã€åº¦ç§˜è¿™ç§äº§å“的时候,第一æ¥OK,第二æ¥ã€ç¬¬ä¸‰æ¥å‘现太难åšäº†ï¼Œå¯¹å¾ˆå¤šå…¬å¸æ¥è¯´è¿™æ˜¯ä¸å¯èƒ½çš„事情,åªå¥½æ”¾å¼ƒã€‚

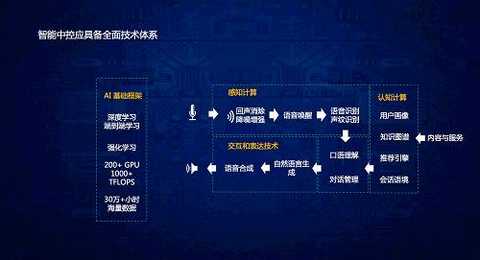

今天很多人已ç»ç”¨äº†è¯éŸ³è¾“入法,智能音箱ä¸å°±æ˜¯æ‹¿ä¸ªéŸ³ç®±ï¼ŒæŽ¥ä¸ªSDKä¸å°±å®Œäº†å—?ä¸æ˜¯çš„,这里é¢åŒ…å«å¤ªå¤šçš„技术环节,包括回声消除ã€é™å™ªã€è¯éŸ³å”¤é†’ã€è¯éŸ³è¯†åˆ«ï¼ŒåŒ…æ‹¬äº‘ç«¯è¯†åˆ«ï¼Œä¹ŸåŒ…æ‹¬ä½ŽåŠŸè€—çš„æœ¬åœ°è¯†åˆ«ï¼Œæ ¹æ®ç”¨æˆ·å–œæ¬¢ã€ç”¨æˆ·ç”»åƒã€çŸ¥è¯†å›¾è°±ã€æŽ¨è引擎包括整个对è¯é€»è¾‘以åŠæœ€åŽç”¨é«˜è¡¨çŽ°åŠ›å¾ˆè‡ªç„¶çš„åˆæˆæ–¹å¼ç»™ç”¨æˆ·å馈出æ¥ï¼Œè¿™é‡Œé¢æ¯ä¸ªç‚¹éƒ½å¯ä»¥æˆå°±ä¸€ç¯‡éžå¸¸ä¼Ÿå¤§çš„åšå£«è®ºæ–‡ã€‚对公å¸æ¥è¯´ï¼Œæžå‡ 个åšå£«ç‚¹æ怕ä¸æ˜¯é‚£ä¹ˆç®€å•ã€‚

技术一定è¦ç«¯åˆ°ç«¯æ‰“通,我们æ出AI 集æˆåŒ–的概念。有很多的技术并ä¸æ˜¯å¤ç«‹çš„,æ¯ä¸ªæŠ€æœ¯ä¹‹é—´ä¸æ˜¯é»‘ç›’å,一定è¦æ·±å…¥æ‰“通æ‰èƒ½å¾—到最终比较好的体验。

业内有人æ出,在移动互è”网的今天,我们所说的

AI 产å“ç»ç†ï¼Œå’Œä»¥å‰çš„产å“ç»ç†å®Œå…¨ä¸ä¸€æ ·ï¼Œä»Šå¤©çš„人工智能产å“ç»ç†ä¸€å®šè¦ç²¾é€šæŠ€æœ¯ï¼ŒçŸ¥é“æ¯ä¸ªæŠ€æœ¯çš„优点和缺点。ä¸æ˜¯å…‰æœ‰ç®—法就够了,还需è¦éº¦å…‹é£Žé˜µåˆ—技术ç‰ç‰ï¼Œè·Ÿæ™ºèƒ½å®¶å±…ä¼ä¸šæ‰“通。æ£å¦‚之å‰æˆ‘ä»¬éƒ½ä¹ æƒ¯äº†GUI,基于åŒä¸€ç•Œé¢ï¼ŒGUI 怎么设计,怎么跟设备互动,逻辑怎么设计?包括对接大é‡çš„第三方资æºï¼ŒæŒæ›²çš„ã€éŸ³ä¹çš„ã€å¤©æ°”çš„ã€è‚¡ç¥¨çš„ç‰ç‰ï¼Œæ¯ä¸ªçŽ¯èŠ‚都很难åšï¼Œéƒ½å¾ˆé‡è¦ã€‚智能音箱ä¸æ˜¯æŽ¥ä¸€ä¸ªè®¯é£žæˆ–者è¯éŸ³SDK å°±OK了。

今天有很多人会想,è¯éŸ³è¯†åˆ«ç”¨ä¸€å®¶çš„技术,麦克风阵列用一家的技术,其它技术å†é€‰ç”¨ä¸€å®¶ï¼Œä¸²èµ·æ¥ä¸å°±å¯ä»¥äº†å—?这个想法是ä¸çŽ°å®žçš„。安é™çŽ¯å¢ƒä¸‹å’Œå®¶é‡Œå¼€ç€ç”µè§†æœºçš„环境下,è·ç¦»åˆ†åˆ«1ç±³ã€3ç±³ã€5ç±³ï¼Œç§‘èƒœè®¯æ— è®ºåœ¨å®‰é™è¿˜æ˜¯å™ªéŸ³çŽ¯å¢ƒä¸‹ï¼Œæ— 论1ç±³ã€3ç±³ã€5ç±³ï¼ŒæŒ‡æ ‡éƒ½å¾ˆç¨³å®šã€‚æ€Žä¹ˆåšåˆ°çš„?用ä¸åŒåŽ‚商的麦克风阵列对接BAT 自己的识别引擎,科胜讯的“ä¸å¥½â€éžå¸¸ç¨³å®šï¼Œåªæœ‰ç™¾åˆ†ä¹‹å…åå‡ ï¼Œæ‡‚è¡Œçš„äººéƒ½èƒ½å¤Ÿçœ‹å‡ºæ¥ã€‚这说明科胜讯很稳定。但是科胜讯的技术ä¸æ˜¯ä¸ºäº†è¯†åˆ«åšçš„,而是为了笔记本电脑上的通è¯è´¨é‡åšçš„,这ç§å¤ç«‹çš„模å—完全ä¸è¡Œã€‚国内æŸäº›å…¬å¸è‡ªå·±åšäº†éº¦å…‹é£Žé˜µåˆ—,去对接BAT çš„è¯†åˆ«å¼•æ“Žï¼Œæ•ˆæžœä¸€æ ·å·®ï¼Œç”šè‡³ä¸å¦‚科胜讯。

我们å†çœ‹ä¸€ä¸‹è®¯é£žåšçš„或者云知声åšçš„,我们很好地把麦克风阵列和AI æŠ€æœ¯ç«¯åˆ°ç«¯æ‰“é€šï¼Œæ€§èƒ½æŒ‡æ ‡ä¸Šç¢¾åŽ‹å¼åœ°è¶…越它们,所以说

AI 技术一定è¦èŠ¯ç‰‡åŒ–。å‰ä¸ä¹…国内厂商å‘å¸ƒäº†éŸ³ç®±ï¼Œè¿˜æ˜¯ä¼ ç»Ÿäº’è”网产å“ç»ç†çš„æ€ç»´ï¼Œéº¦å…‹é£Žé˜µåˆ—用A,è¯éŸ³è¯†åˆ«ç”¨B,自然è¯éŸ³ç†è§£ç”¨C,最åŽçš„产å“就是一个笑è¯ã€‚最åŽçš„产å“ä¼šè®©ä½ å´©æºƒã€‚åªè¦éŸ³ç®±ä¸€æ”¾éŸ³ä¹ï¼Œ2米之外è¦é å¼ï¼›æ”¾éŸ³ä¹çš„è¯ï¼Œ1ç±³åŠä»¥å†…基本唤ä¸é†’或者唤醒率最多5%ã€10%。把Aã€Bã€C 三个厂商的技术æ在一起,åŽæžœå°±æ˜¯è¿™æ ·ã€‚
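Part of the cost of gluing vendors together shows up even before any mismatch between modules. Under the simplifying assumption that the stages fail independently, the chance that a request survives the whole chain is the product of the per-stage success rates; the rates below are hypothetical:

$$
P_{\text{end-to-end}} = P_{\text{wake}} \times P_{\text{ASR}} \times P_{\text{NLU}} = 0.95 \times 0.90 \times 0.90 \approx 0.77
$$

Stages that each look "90-something percent good" thus deliver only about three successes in four. The talk's point is that reality is worse than this independent estimate, because a front end tuned for something else (call quality, for example) degrades the recognizer's input and lowers the downstream stage rates themselves.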

我们æ出ä¸æŽ§è§£å†³æ–¹æ¡ˆPandora,希望解决现在讲的这些困境,把麦克风阵列技术ã€AI技术ç‰æ‰€æœ‰çš„技术端到端打通,解决å‰é¢è¯´çš„行业问题。我们集æˆäº†4MIC 阵列é™å™ªï¼Œ5米远场è¯éŸ³è¯†åˆ«ï¼Œç»§æ‰¿äº†Echoã€Google Homeã€HomePod ç‰éŸ³ç®±çš„特点,åŒæ—¶å…·å¤‡äº†å¾ˆå¤šå®ƒä»¬æ²¡æœ‰çš„特点。除了智能化æœåŠ¡ä¹‹å¤–,还有一个很é‡è¦çš„技能——连接控制家里é¢æ‰€æœ‰è®¾å¤‡æ—¶ï¼Œæˆ‘们对这些设备有一个最基本的è¦æ±‚就是速度。试想家里一个空调摇控器,按一下按纽,一两秒钟æ‰ååº”ï¼Œä½ ä¸çŸ¥é“按了之åŽæœ‰æ²¡æœ‰å应,也许连ç€ä¸‰ä¸‹ï¼Œæœ€åŽä¹Ÿä¸çŸ¥é“开还是关了,按一下没å应,å†æŒ‰ä¸€ä¸‹ä¸çŸ¥é“那个状æ€æ˜¯å¼€è¿˜æ˜¯å…³ã€‚一个机器人,也许它有éžå¸¸å¼ºå¤§çš„云端智能能力,但是å应特别迟é’,怎么办?它一定会让用户崩溃。我们æ出一定è¦å…·å¤‡ç¬¬ä¸€ç‚¹ï¼ŒPandora的所有系统通过云端æ供认知和智能æœåŠ¡ï¼ŒåŒæ—¶æ”¯æŒç»ˆç«¯çš„AI交互,以åŠåœ¨èŠ¯ç‰‡ç»ˆç«¯æ„ŸçŸ¥å’Œæœ¬åœ°æ™ºèƒ½ã€‚

支撑Pandora çš„æŠ€æœ¯ï¼Œç¬¬ä¸€æ˜¯å¿«ã€‚å¤šå¿«ï¼Ÿé—ªç”µä¸€æ ·å¿«ã€‚æˆ‘ä»¬Pandora 实现的技术能力唤醒时间å°äºŽ0.3秒。云端å“应速度å°äºŽ1 秒。ä¸å…‰æ˜¯è¯´è¯†åˆ«çš„å应速度,也包括一系列环节,包括云端识别,包括ç†è§£ï¼ŒåŒ…括知识图谱,包括æœåŠ¡å¬å›žï¼Œå¿…é¡»è¦çœŸçš„从互è”网产å“ç»ç†çš„角度æ¥æ‰“磨这个技术。

第二,准。è¦èƒ½éžå¸¸å‡†ç¡®åœ°ç†è§£ç”¨æˆ·åœ¨è¯´ä»€ä¹ˆã€‚实际上到今天为æ¢ï¼Œå½±å“我们很多产å“è½åœ°çš„一个很é‡è¦çš„åŽŸå› ï¼Œæ˜¯å¾ˆå¤šæŠ€æœ¯æŒ‡æ ‡åªèƒ½å±€é™äºŽå®žéªŒå®¤çŽ¯å¢ƒé‡Œé¢ï¼Œå®ƒå¯ä»¥æ‹¿æ ‡å‡†çš„æ•°æ®åº“跑到97%ã€98%的准确率,但在工业环境里é¢å´ä¸€ç‚¹ä»·å€¼éƒ½æ²¡æœ‰ã€‚

除了è·ç¦»è¿œä¹‹å¤–,å£éŸ³ä¹Ÿæ˜¯ä¸ªå¤§æŒ‘战。云知声今天å‡å€Ÿéº¦å…‹é£Žé˜µåˆ—和识别技术,能åšåˆ°æˆä¸ºå›½å†…工业界唯一é‡äº§å‡ºè´§çš„åŽ‚å•†ï¼Œæ²¡æœ‰ä¹‹ä¸€ï¼Œæ˜¯å”¯ä¸€ã€‚æ— è®ºè·Ÿå›½å†…å“ªä¸€å®¶åŽ‚å•†PK,我们唯一能åšåˆ°ï¼Œç›´æŽ¥æ‰¾å¸¦æœ‰å£éŸ³çš„被测试人过æ¥ï¼Œä¸éœ€è¦åŸ¹è®ï¼Œä¸ç”¨æ•™ä»–æ€Žä¹ˆè¯´ï¼Œå› ä¸ºç”¨æˆ·æœ¬èº«ä¸çŸ¥é“怎么说;第二,他会直接把空调的风é‡å¼€åˆ°æœ€å¤§ã€‚第三,直接说方言。产å“å¼€å‘的时候会é‡åˆ°å¾ˆå¤šå›°éš¾ï¼Œäº§å“想è¦é‡äº§çš„è¯ï¼Œæ–¹è¨€å¿…é¡»è¦è§£å†³ã€‚

工业é‡äº§è¿˜æœ‰ä¸€ä¸ªå¾ˆé‡è¦çš„æŒ‡æ ‡æ˜¯çœã€‚很多公å¸å›¢é˜Ÿåšä¸€äº›PR 产å“,性能还å¯ä»¥ï¼Œä¸Šæ¥æžä¸€ä¸ª4 æ ¸CPUï¼Œå‡ ä¸ªG 的内å˜ï¼Œæ— 法é‡äº§ã€‚云知声æ出æ¥ä¸€ä¸ªè§‚点——一定è¦çœã€‚最低主频低于100兆;第二,内å˜å°äºŽ100K å—节。

还有一件é‡è¦çš„äº‹æƒ…æ˜¯ç¨³ã€‚ä½ åœ¨å®¶é‡Œç¡ç€äº†ï¼ŒéŸ³ç®±çªç„¶ç»™ä½ è®²é¬¼æ•…äº‹ï¼Œè¿™æ ·çš„äº§å“是ç»å¯¹ä¸è¡Œçš„。è¦åšåˆ°ä½ å«å®ƒçš„时候它一定会ç”åº”ä½ ï¼Œä¸å«çš„时候ç»å¯¹ä¸ç”应。

用户也å¯ä»¥ä¸Žæˆ‘们的设备ä¿æŒå¤šè½®å¯¹è¯ï¼Œå¹¶åœ¨äº¤äº’ä¸éšæ—¶æ‰“æ–,设备都å¯ä»¥çµæ´»åº”对,实现如水般顺畅的æµå¼äº¤äº’。除了多轮对è¯ï¼Œä»Šå¹´ç³»ç»Ÿåˆæ”¾å…¥äº†ç™¾ç§‘知识,机器人ä¸ä»…是助ç†è¿˜æ˜¯ä¸“家,对用户有更深入的ç†è§£å’ŒæŽŒæ¡ã€‚它会在使用过程ä¸ä¸æ–å¦ä¹ ä½ äº†è§£ä½ ã€‚æˆ‘ä»¬çš„è®¾å¤‡è¿˜æœ‰ç”·å£°å¥³å£°å’Œç«¥å£°ï¼Œå“ªæ€•åªæœ‰10分钟的数æ®éƒ½å¯ä»¥ç”Ÿæˆé«˜è¡¨çŽ°åŠ›çš„声音。

通过ä¸æŽ§æ–¹æ¡ˆï¼Œå³ä½¿ä¸æŽ§è®¾å¤‡æœ¬èº«æ²¡æœ‰å±å¹•ï¼Œä¹Ÿå¯ä»¥æŠŠå®¶é‡Œæ‰€æœ‰å±å¹•éƒ½ç”¨èµ·æ¥ï¼Œåšåˆ°æµå¼å¯¹è¯ï¼Œè®©æ‰€æœ‰çš„ç”¨æˆ·è¡Œä¸ºä¹ æƒ¯åœ¨å„ä¸ªè®¾å¤‡ä¹‹é—´æ— ç¼æµåŠ¨ã€‚我们的方案把所有åˆä½œä¼™ä¼´çš„周期压缩到6个月以内,而且å„个设备都å¯ä»¥ä½¿ç”¨ã€‚

Ding Yi: Many robot products overstate what they can do, and that will not bring real gains in sales or reputation

Ding Yi is a co-founder of HiWiFi and Dajie.com, with rich experience and insight in brand marketing and channel sales. He currently leads the overall marketing, sales, and operations of Ling Technology, and is committed to building a world-class brand with soul and taking Ling's products around the world.


物çµç§‘技是一个新创立的人工智能科技公å¸ï¼Œæ˜¯ç”±ä¸Šå¸‚å…¬å¸ä¸œæ–¹ç½‘力投资的,我们的定ä½éžå¸¸æ¸…楚,就是åšæ¶ˆè´¹è€…å“牌,åšæ¶ˆè´¹è€…产å“,而产å“主è¦æ˜¯äººå·¥æ™ºèƒ½çš„机器人产å“。

我们éžå¸¸é‡è§†äº§å“定义,åšå¥½äº¤äº’和体验,这个对我们æ¥è®²éžå¸¸é‡è¦ã€‚现在所有的消费者智能类的产å“,除了Echo,销é‡éƒ½ä¸å¥½ã€‚而我们è¦æ ¹æ®åœºæ™¯å’Œéœ€æ±‚æ¥ç»†åŒ–产å“定义,åšæœ‰å®žé™…价值的产å“。现在市é¢ä¸Šå¾ˆå¤šæœºå™¨äººäº§å“把边界过度放大,对消费者æ¥è®²ï¼Œæ高了消费者的期望值,拿到以åŽï¼Œè½å·®éžå¸¸å¤§ï¼Œä¸ä¼šå¸¦æ¥çœŸæ£çš„销售和å£ç¢‘å˜åŒ–。所以,我们认为如何定义产å“本身和控制消费者预期很é‡è¦ã€‚

人目å‰éƒ½æ˜¯é€šè¿‡æœºå™¨æ¥å¯¹æŽ¥ä¿¡æ¯æµå’ŒæœåŠ¡æµçš„,这是通过人和设备之间的交互完æˆï¼Œæˆ‘ä»¬çš„æ ¸å¿ƒæŠ€æœ¯ä¼šä¸“æ³¨åœ¨äººæœºäº¤äº’è¿™ä»¶äº‹æƒ…ã€‚

最终的智慧æ¥è‡ªäºŽäººå’Œæœºå™¨çš„共生的能力,共åŒè¿›åŒ–,我们希望交互的方å¼ä»Žé”®ç›˜åˆ°é¼ æ ‡GUI 时代,å†åˆ°touch 时代,å†åˆ°çŽ°åœ¨å¹¶æ²¡æœ‰å®Œå…¨å®šä¸‹æ¥çš„BCI。大部分人现在会沉浸在touch 终端。所有注æ„力都在å±å¹•ä¸Šï¼Œè€Œæˆ‘们è¦åšçš„设备是é™é»˜å¼çŽ¯ç»•å¼çš„设备,这将是一ç§æ— 处ä¸åœ¨çš„智能化和计算力。

对于æœåŠ¡äºŽå®¶åºçš„机器人æ¥è¯´ï¼Œå®¶åºé‡Œé¢çš„两类人群——æˆå¹´äººå’Œæœªæˆå¹´äººâ€”—认知方å¼å’Œè¯è¨€ä½“系完全ä¸ä¸€æ ·ã€‚

我们分æˆå¹´äººçš„产å“和未æˆå¹´äººçš„产å“。我们对产å“的具体使用场景ã€å…·ä½“功能产å“的定义åšäº†å¾ˆå¼ºç¡¬çš„é™å®šï¼Œè¿™æ ·çŽ°åœ¨çš„AI 技术边界ä¸ä¼šè¾¾ä¸åˆ°ã€‚最近我们æ£åœ¨æ‹›å‹Ÿæˆ‘们第一款产å“的天使用户,是一款儿童阅读养æˆæœºå™¨äººLuka,用计算机视觉技术å¯ä»¥è¯»å¸‚é¢ä¸Šçš„绘本书,在京东上æ£åœ¨é¢„约,大家å¯ä»¥åŽ»äº†è§£ä¸‹ã€‚

In addition, together with three listed Chinese companies, a fund, and three AI startups, we founded the Wanxiang Artificial Intelligence Research Institute. The aim is to tie underlying algorithms and technologies directly to industry, so that researchers know from the start who will use their work and how. The institute runs on a fund model, operates globally, and is closely connected to industry.

我们物çµç§‘技的新Office 在望京的浦项ä¸å¿ƒé¡¶å±‚,还é…备了专业的咖啡馆,å¯ä»¥è¿›è¡Œç™¾äººå·¦å³çš„å‘布会,风景éžå¸¸å¥½ï¼Œå¸Œæœ›åšæˆäººå·¥æ™ºèƒ½æ¶ˆè´¹çº§å“牌的体验厅ã€å±•ç¤ºåŽ…和大家èšä¼šçš„场所,欢迎下次新智元的百人会æ¥æˆ‘们新的Office 举办。

Panel: An analysis of existing smart speaker products, and the outlook for China's smart speaker market

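Yang Jing: The theme of today's panel is "An Analysis of Existing Smart Speaker Products, and the Outlook for China's Smart Speaker Market". At Apple's developer conference in early June, the Apple HomePod smart speaker debuted as a strong rival to Amazon Echo and Google Home. Several domestic vendors, including Haier, have launched or will soon launch their own smart speakers, and BAT are interested in or have already entered the game. Behind this battle are advances in human-computer interaction technology and real market demand for a new generation of human-computer interfaces. More natural human-computer interaction is one of the defining features of the intelligent era. A new generation of interfaces built on speech recognition, semantic analysis, visual capture, context awareness, VR, and similar technologies will become the key modules that directly determine user experience in smart-terminal scenarios such as the smart home and autonomous driving. Today I'd like the experts here to discuss: where are the technical challenges of smart speakers? Is any Chinese smart speaker close to Echo's level? Can China soon launch a speaker that dominates the market, or is at least widely accepted by consumers? First, let's invite Director Zhang Hongfeng to share his views.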

Zhang Hongfeng: There is no doubt that, as things develop, homes will have an intelligent entry point. Facebook recently developed Jarvis, which has the same effect; whether that interaction takes the form of a speaker is not certain. What matters most is constraining the scenario. I've seen research on Echo: there are a few top uses, such as listening to music and setting alarms, while some applications are used very rarely. Should we aim for breadth and completeness, or deliver genuinely specific value? That question deserves serious thought.

Yang Jing: Mr. Zhao Feng, are there any products you're optimistic about?

赵峰åšå£«ï¼Œæµ·å°”家电产业集团副总è£å…¼CTOï¼Œæ›¾æ‹…ä»»å¾®è½¯äºšæ´²ç ”ç©¶é™¢å¸¸åŠ¡å‰¯é™¢é•¿ï¼Œä¸»è¦è´Ÿè´£ç‰©è”网ã€å¤§æ•°æ®ã€è®¡ç®—机系统åŠç½‘络ç‰é¢†åŸŸçš„ç ”å‘工作。赵峰åšå£«æ¯•ä¸šäºŽéº»çœç†å·¥å¦é™¢(MIT) 计算机系åŠäººå·¥æ™ºèƒ½å®žéªŒå®¤ï¼Œæ›¾åœ¨ä½äºŽç¡…è°·çš„Xerox PARC担任首å¸ç§‘å¦å®¶ï¼Œåˆ›ç«‹äº†è¯¥ä¸å¿ƒçš„ä¼ æ„Ÿå™¨ç½‘ç»œç ”ç©¶ï¼Œå¹¶å…ˆåŽä»»æ•™äºŽç¾Žå›½ä¿„亥俄州立大å¦å’Œæ–¯å¦ç¦å¤§å¦ã€‚èµµåšå£«æ˜¯ç¾Žå›½ç”µæœºç”µå工程师å¦ä¼šIEEE Fellow,撰写了物è”网领域第一本专著《Wireless Sensor Networks》,被多所美国大å¦é€‰ä¸ºæ•™ç§‘书。


赵峰:对智能音箱æ¥è¯´ï¼Œå®žé™…上更é‡è¦çš„是背åŽçš„è¯éŸ³åŠ©æ‰‹ã€‚大家接下æ¥æ›´éœ€è¦å…³æ³¨çš„,是智能音箱背åŽæ•´ä¸ªè¯éŸ³æœåŠ¡ç”Ÿæ€ä½“系,它的硬件展现方å¼å¯èƒ½æ˜¯åœ¨éŸ³ç®±ä¸Šï¼Œä½†æˆ‘认为,家里任何一个智能硬件都å¯ä»¥å½“æˆå…¥å£ï¼Œæ™ºèƒ½éŸ³ç®±è¿™ä¸ªæ¦‚念需è¦æ³›åŒ–,而ä¸æ˜¯ç®€å•çš„音箱形æ€ã€‚

现在大家对人工智能的期望远远高于技术能够实现和æ供给用户的体验,这一点我éžå¸¸æ‹…心。看了æ¨é™æ€»å…³äºŽ2016 å¹´äººå·¥æ™ºèƒ½çš„è°ƒç ”æŠ¥å‘Šï¼Œé‡Œé¢è®²åˆ°äººå·¥æ™ºèƒ½ä¸‰èµ·ä¸‰è½ï¼Œæˆ‘希望这次第三次ä¸è¦å†è½ä¸‹åŽ»ã€‚å‰é¢äººå·¥æ™ºèƒ½ä¸‰èµ·äºŒè½ï¼Œç¬¬ä¸€æ³¢æ˜¯åˆšå¼€å§‹çš„符å·è¿ç®—专家系统,第二波是神ç»ç½‘络,那时候没有大规模è¿ç®—和数æ®çš„支撑,大家的期望值和现实之间产生了è½å·®ã€‚这次第三波ä¸ä¸€æ ·ï¼ŒåŸºäºŽæ·±åº¦å¦ä¹ ,大数æ®å’Œå¤§è§„模计算,è¯éŸ³è¯†åˆ«å’Œå›¾åƒè¯†åˆ«åœ¨æœ‰äº›é¢†åŸŸå·²ç»èƒ½å¤Ÿè¾¾åˆ°ä½“验上的一个阈值,大家能够接å—è¿™æ ·çš„ä½“éªŒã€‚ä»¥å‰ä¸èƒ½è¾¾åˆ°è¿™ä¸ªé˜ˆå€¼ï¼Œåå¥è¯é‡Œé¢æœ‰ä¸‰å¥è¯è®¡ç®—机是识别错的,大家感觉就éžå¸¸å·®ã€‚在泛化的人工智能ä¸ï¼Œç‰¹åˆ«æ˜¯æ²¡æœ‰é™åˆ¶çš„对è¯å½“ä¸ï¼Œè¦èƒ½å¤Ÿéžå¸¸æµç•…地åƒäººä¸€æ ·è‡ªç„¶äº¤äº’,现在还ä¸èƒ½åšåˆ°ã€‚我们è¦èšç„¦åœ¨å‡ 个垂直领域,把体验åšå¥½ã€‚比如在家åºåœºæ™¯é‡Œï¼ŒæŠŠç”¨æˆ·ä½“验真æ£åšåˆ°æžè‡´ï¼Œä½¿å¯¹è¯èƒ½å¤Ÿæµç•…,ä¸ç®¡æ˜¯è¿žç»è¯´ï¼Œè¿˜æ˜¯å¤šè½®å¯¹è¯ï¼Œè¿˜æ˜¯èƒŒåŽçŸ¥è¯†åº“的支æŒï¼Œéƒ½èƒ½å¤Ÿå»ºå…¨ï¼Œç”¨æˆ·èƒ½çœŸæ£å¾—到她需è¦çš„æœåŠ¡ã€‚如果现在想åšä¸€ä¸ªç±»äººæœºå™¨äººï¼Œè¿½æ±‚什么都懂,知识é¢åƒäººä¸€æ ·å…¨é¢ï¼ŒçŽ°åœ¨è¿˜æ²¡åˆ°é‚£ä¸ªæŠ€æœ¯æ°´å¹³ï¼Œè€Œä¸”还会把人工智能带入æ»èƒ¡åŒï¼Œæˆ‘ä¸å¸Œæœ›çœ‹åˆ°é‚£ä¸ªç¬¬ä¸‰ä¸ªâ€œè½â€å‡ºçŽ°ã€‚大家的期望是,在现阶段把智能音箱体验åšå¥½ï¼Œé¢†åŸŸæ›´èšç„¦ä¸€ç‚¹ï¼Œæ»¡è¶³ç”¨æˆ·çš„刚需。å¬éŸ³ä¹æ˜¯åˆšéœ€ï¼Œæ™ºæ…§ç”Ÿæ´»ã€æ™ºæ…§å®¶åºé‡ŒéŸ³ç®±ä½œä¸ºç”¨æˆ·äº¤äº’人å£ï¼Œä½œä¸ºæ™ºæ…§å®¶åºä¸€ä¸ªä¸æŽ§ï¼Œå’Œå„ç§æ™ºèƒ½ç¡¬ä»¶äº’è”互通,通过交互获å–æœåŠ¡ï¼Œä¹Ÿæ˜¯ä¸€ä¸ªåˆšéœ€ã€‚但如果çŸæœŸå†…æœŸæœ›å€¼æ— é™é«˜çš„è¯ï¼Œå¯¹æ•´ä¸ªä¸šç•Œçš„æŒç»å‘展实际上是负é¢çš„。

我就说这两点,第一是智能音箱更é‡è¦çš„是背åŽçš„è¯éŸ³åŠ©æ‰‹ï¼Œå¯ä»¥åœ¨éŸ³ç®±ä¸Šå±•çŽ°ï¼Œä¹Ÿå¯ä»¥åœ¨å†°ç®±æˆ–电视上展现;第二是现阶段需è¦æå‡ç”¨æˆ·ä½“验。

Yang Jing: Progress may look a bit slow, but smart speakers are indeed all improving. Professor Xu Fuchun, could you give us a prediction: which smart speaker are you most optimistic about?

Xu Fuchun: The cognitive era cannot do without voice interaction, and voice interaction clearly means smart speakers; the future market is very large. One of the biggest changes in education is that the books of the future will no longer be the e-books we hold in our hands or read on computers. Future e-books will certainly be multimedia, stimulating the brain's various sensory cortices to work together, so that learning efficiency in a multimedia environment improves greatly. On the other hand, good music played through a TV is already distorted; pairing it with truly good audio would be wonderful. Poetry recitation played through a phone loses a lot, but through a very good speaker the experience becomes much richer. To borrow a phrase, AI makes our future better and our lives better, and speakers are indispensable here. That includes the speakers inside laptops: I hope they become ever more realistic and better, with a sense of space. Distributed speakers might surround us on several sides rather than one, and beautiful sound can leave a deep impression and fill our days with sunshine.

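Yang Jing: A member of our group chat raised a pointed question. Each giant has launched its own ecosystem platform, which makes it hard for startups to pick a side. Developing algorithms first for this platform and then for that one wastes effort, and the choice also affects upstream and downstream hardware, sales, and so on. Everyone is wondering: which one is better to join?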

Xu Fuchun: A speaker is hardware and needs software support; sound processed by software will be even better. It may also be like bike sharing, needing the backing of Tencent or Alibaba. China's big AI companies are BAT, and abroad they are Google, Microsoft, and Facebook; these big domestic companies play a very important role in AI applications.

These giants are roughly evenly matched; whoever brings us the best experience next is the one we'll support.

Yang Jing: Huang Wei, could you be a bit sharper and more decisive and tell us which is better? You run a startup too. If you eventually had to choose a platform, which do you think is more reliable?

黄伟:这是创业公å¸å¾ˆå¯èƒ½é¢ä¸´çš„选择,这个问题ä¸æ˜¯éœ€è¦åŒå‘选择的。我觉得BAT都有很大的机会,我更看é‡è°æœ‰æ•°æ®ï¼ŒæŠ€æœ¯çš„è¦ç´ 对最åŽçš„æˆåŠŸä¸æ˜¯æœ€é‡è¦çš„。相信BAT 有足够的资æºå¸å¼•ä¸€æµäººæ‰ï¼ŒåŒ…括我09å¹´ä»Žä¼ ç»Ÿçš„ITä¼ä¸šåŽ»äº’è”网公å¸ï¼Œä½™å‡¯åŽ»ç™¾åº¦æ›´æ™šï¼Œåœ¨ä¹‹å‰è¿™äº›BAT互è”网巨头里é¢æ‰¾ä¸å‡ºå‡ 个åšå£«ï¼ŒåŸºæœ¬æ˜¯ä¸ªä½æ•°ï¼ŒåŽæ¥å¾ˆå¤šäººè¯´è€é»„当时怎么没去BAT?那时候没有BAT,那时候是SBAT,盛大最牛,我去的盛大。盛大全公å¸çš„åšå£«å½“æ—¶ä¸ä¸€å®šæœ‰äº”个人,那时候的互è”网公å¸æ›´å¼ºè°ƒè¿è¥ã€‚今天å¯ä»¥çœ‹åˆ°æœ‰å¾ˆå¤šä¸€æµçš„科å¦å®¶éƒ½åœ¨äº’è”网公å¸é‡Œé¢ï¼Œäºº


![[New Wisdom 100 People's Association] Seven masters talk about the status quo and pain points of human-computer interaction and terminal intelligence](http://i.bosscdn.com/blog/80/a0/96/6b03580ed64cae04a16353d6b5.jpg)

![[New Wisdom 100 People's Association] Seven masters talk about the status quo and pain points of human-computer interaction and terminal intelligence](http://i.bosscdn.com/blog/5d/22/79/237f285414882ad826e9ad77ff.jpg)

![[New Wisdom 100 People's Association] Seven masters talk about the status quo and pain points of human-computer interaction and terminal intelligence](http://i.bosscdn.com/blog/08/6c/87/f86c758a1cfee150e942665cb9.jpg)